
21 April, 2024

June 19, 2022

vRealize Automation Code Stream is a CI/CD tool for creating pipelines that manage the DevOps lifecycle by building and automating your application’s entire release process.

With Code Stream, we can leverage any service in vRA to be used within a Pipeline, such as deploying Cloud Templates, executing workflows and automation, testing applications, integrating with external tools, and much more.

In order to showcase some of the fantastic things you can do with Code Stream, I will demonstrate a full application build pipeline. The application is fairly simple, written in Python and using the pokeapi.co API library to get some useful Pokémon information!
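To give a feel for the kind of application being built, here is a minimal sketch of a Python client for pokeapi.co. It is not the actual 'Catch-Them-All' code: the function names and the `User-Agent` value are made up for illustration, and it uses only the standard library rather than any particular PokeAPI wrapper.

```python
import json
import urllib.request

# Base URL of the public PokeAPI v2 REST endpoint.
POKEAPI_BASE = "https://pokeapi.co/api/v2"


def pokemon_url(name: str) -> str:
    """Build the PokeAPI URL for a single Pokémon (names are lowercase)."""
    return f"{POKEAPI_BASE}/pokemon/{name.strip().lower()}"


def summarize(payload: dict) -> dict:
    """Keep only a few fields from a PokeAPI /pokemon response."""
    return {
        "id": payload["id"],
        "name": payload["name"],
        "height": payload["height"],
        "weight": payload["weight"],
        "types": [entry["type"]["name"] for entry in payload["types"]],
    }


def fetch_pokemon(name: str, timeout: float = 10.0) -> dict:
    """Call PokeAPI over HTTPS and return the summarized result."""
    request = urllib.request.Request(
        pokemon_url(name),
        headers={"User-Agent": "catch-them-all-sketch"},  # hypothetical client name
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return summarize(json.load(response))
```

Calling `fetch_pokemon("pikachu")` needs network access and would return a small dictionary with the ID, name, height, weight, and types of the requested Pokémon.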

The pipeline steps are as follows:

• Build

o Pipeline execution starts with a commit to a local GitLab repository

o Jenkins job builds a docker image out of the application

o Application is tested using a Docker workspace instance for the pipeline run

o Jenkins job pushes the new docker image to a local Harbor repository

• Deploy

o Provision a vSphere VM using Ansible to install Docker

o Pull Image from Harbor repository and run docker on the VM

• Test

o Test the application using a REST API action

o Validate response code

• Notify

o Send email to the user that requested the pipeline trigger

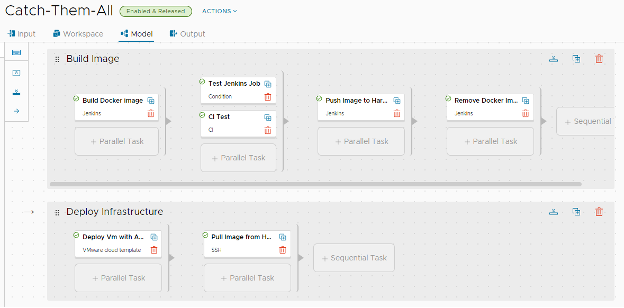

Here we can see the ‘Catch-Them-All’ application pipeline flow:

As you can see, there is a lot going on here. Let’s start with the basic Code Stream configurations.

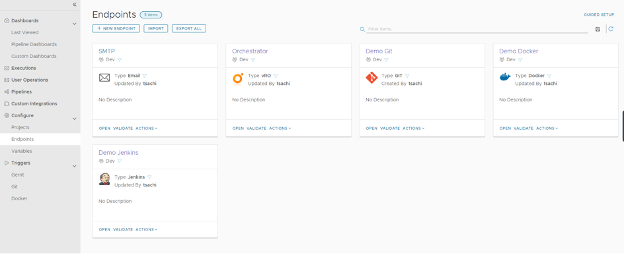

Endpoints:

On this page, I have configured the required tools that we will be using in our project.

1. SMTP server for email notifications

2. vRO automation for sending notifications via other communication channels (e.g. Slack)

3. GitLab repository that will store our application files (and the Jenkins files)

4. Jenkins server, which will run Jobs and SSH commands

5. Docker – Although we have a docker integration in our Code Stream, in this example, I will be using a live docker deployment for the purpose of the app lifecycle.

The docker image is built when the pipeline is triggered and is then pushed to a shared repository.

Next, on the Variables page, we can see the global variables that can be preconfigured for use within our pipelines. This is useful for API tokens or passwords that are shared across multiple projects and pipelines.

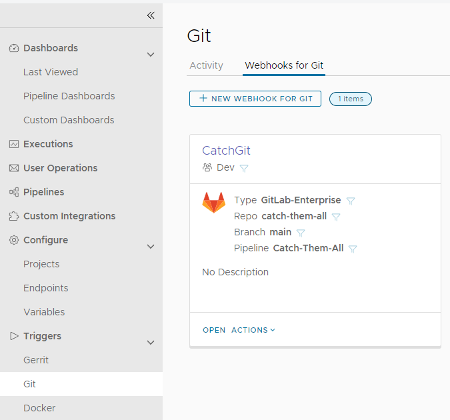

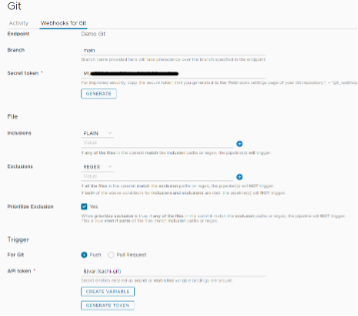

Triggers:

Under this section, we can create Webhooks to trigger our pipelines.

The triggers can be restricted to certain conditions. Inclusions and Exclusions can be configured so that the pipeline is triggered only by certain code changes, specific users, branches, etc.

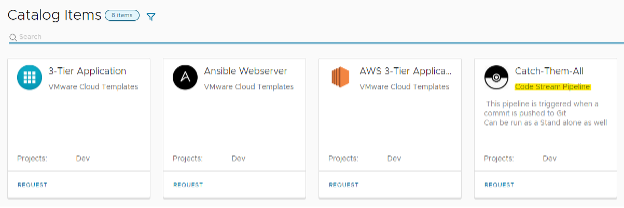
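The idea behind Inclusions and Exclusions can be sketched in a few lines of Python. This is only an illustration of the filtering logic, not how Code Stream implements it: the `TriggerRules` fields, the glob-style patterns, and the example names are all assumptions.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch


@dataclass
class TriggerRules:
    """Hypothetical rule set; field names are illustrative, not Code Stream's schema."""
    branches: list = field(default_factory=lambda: ["*"])
    include_files: list = field(default_factory=lambda: ["*"])
    exclude_files: list = field(default_factory=list)
    exclude_users: list = field(default_factory=list)


def should_trigger(rules, branch, author, changed_files):
    """Return True when a push event passes every inclusion and exclusion."""
    if author in rules.exclude_users:
        return False
    if not any(fnmatch(branch, pattern) for pattern in rules.branches):
        return False
    # Drop excluded files first, then require at least one included file.
    relevant = [
        path for path in changed_files
        if not any(fnmatch(path, pattern) for pattern in rules.exclude_files)
    ]
    return any(
        fnmatch(path, pattern)
        for path in relevant
        for pattern in rules.include_files
    )
```

With rules that include `*.py` on the `main` branch and exclude `docs/*`, a commit touching only documentation would not start the pipeline, while a commit touching `app/main.py` would.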

Of course, in order to run a pipeline, we can also publish it directly to our vRA Service Broker!

Pipeline Tasks:

Let’s go over our stages and briefly explain each task.

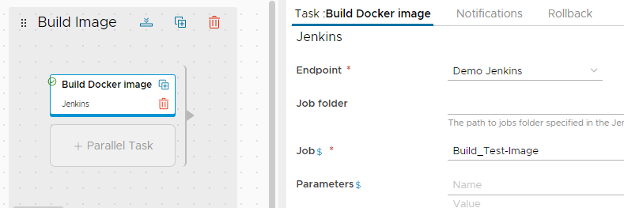

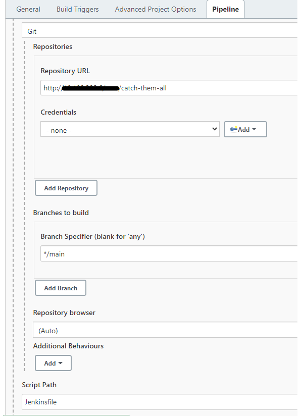

First, the Jenkins job is configured using the Jenkins file that is being pulled from the Git repository. Here is the Jenkins file representation and configuration:

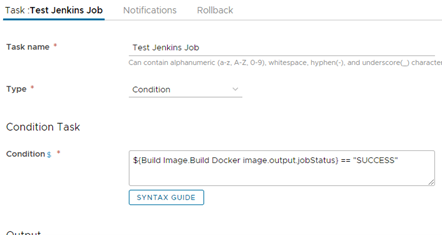

Next, we use the built-in condition validations. Here we will be validating that the docker image build is successful:

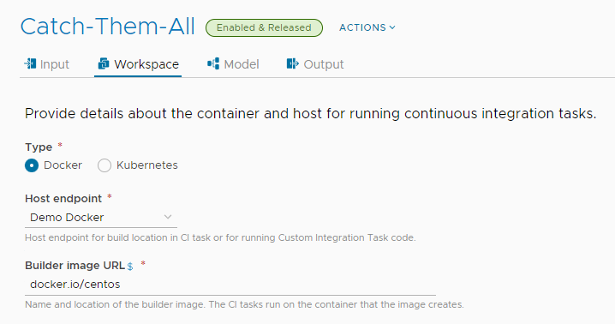

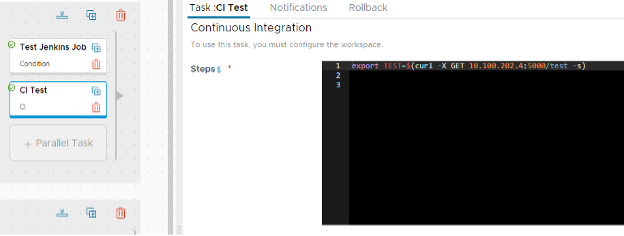

Using a parallel task, we simultaneously run a CI test, using our Docker workspace to run a curl command:

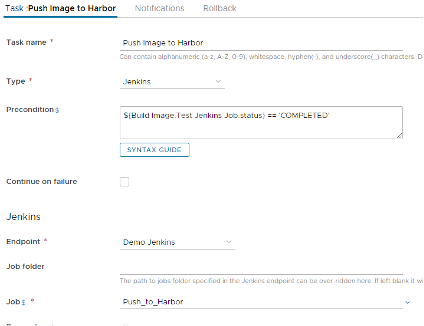

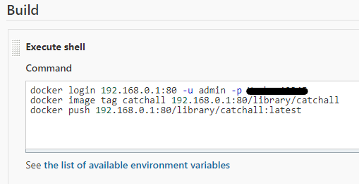

Next, using Jenkins again, we push the new Docker image to our Image repository (local Harbor instance):

In this screenshot, you can see that I have also defined a precondition for this job to run. Once the last Jenkins job status is set to ‘Completed,’ we can run the Jenkins Job ‘Push To Harbor.’ This job uses a simple shell command to execute the push.

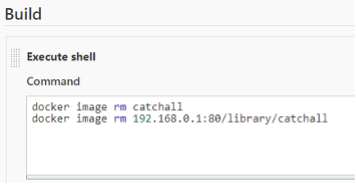
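The exact shell command used by the ‘Push To Harbor’ job is not shown here, but a push to Harbor typically boils down to a `docker tag` followed by a `docker push`. A minimal sketch, assuming a hypothetical registry host, project, and image name:

```python
import subprocess


def harbor_push_commands(registry, project, image, tag):
    """Return the docker tag and push commands as argument lists."""
    local_ref = f"{image}:{tag}"
    # Harbor references follow <registry>/<project>/<repository>:<tag>.
    remote_ref = f"{registry}/{project}/{image}:{tag}"
    return [
        ["docker", "tag", local_ref, remote_ref],
        ["docker", "push", remote_ref],
    ]


def push_to_harbor(registry, project, image, tag):
    """Run both commands, stopping at the first failure."""
    for command in harbor_push_commands(registry, project, image, tag):
        subprocess.run(command, check=True)
```

For example, `push_to_harbor("harbor.example.local", "library", "catch-them-all", "1.0")` would tag and push the image, provided Docker is installed and already logged in to that registry.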

The next step deletes the docker image from the Jenkins server, again using a Jenkins shell job.

We now need to deploy our infrastructure so we can run our application.

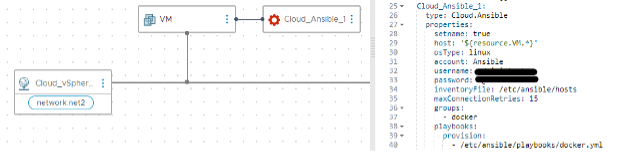

Here we will be using a Cloud Assembly Cloud Template integrated with an Ansible server to run a simple playbook that will install Docker on the provisioned VM.

We can see the YAML from our Cloud Assembly provisioning the Docker playbook on our VM.

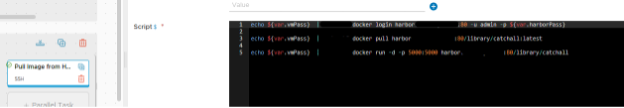

Now we will be using a remote SSH session to our newly deployed VM to pull and run our docker image.

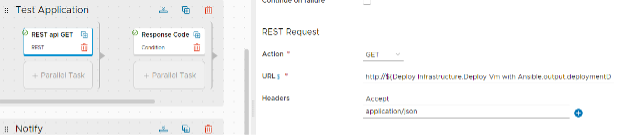
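As a rough illustration of what this SSH step does, the sketch below composes a remote command that pulls the image and starts a container. The user, host, container name, and port mapping are hypothetical, and the real task may use different `docker run` options.

```python
import shlex


def remote_deploy_command(image_ref, container_name, host_port, container_port):
    """Compose 'docker pull && docker run' as one safely quoted shell string."""
    pull = ["docker", "pull", image_ref]
    run = [
        "docker", "run", "-d",
        "--name", container_name,
        "-p", f"{host_port}:{container_port}",
        image_ref,
    ]
    return f"{shlex.join(pull)} && {shlex.join(run)}"


def ssh_command(user, host, remote_command):
    """Wrap the remote command in an ssh invocation (argument list)."""
    return ["ssh", f"{user}@{host}", remote_command]
```

The list returned by `ssh_command` can be passed to `subprocess.run(..., check=True)` from any machine that has SSH access to the new VM.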

The application is now ready to be tested as a whole. For this purpose, let’s use the Code Stream REST tool. All we need to do is enter the Method, the URI, and the request headers:

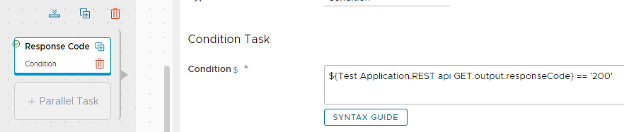

After that, we verify the response code with a ‘Condition’ task:

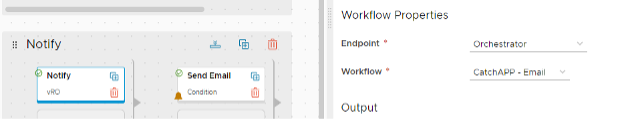
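Outside of Code Stream, the same two steps (a REST call followed by a check on the response code) could be sketched in Python as follows. The function names are made up for illustration, and the expected code of 200 is an assumption about what the application returns on success.

```python
import urllib.error
import urllib.request


def build_request(url, method="GET", headers=None):
    """Mirror the REST task inputs: method, URI, and request headers."""
    return urllib.request.Request(url, method=method, headers=headers or {})


def response_code(request, timeout=10.0):
    """Send the request and return the HTTP status code, including 4xx/5xx."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises on error statuses; the code is still what we validate.
        return error.code


def condition_passes(status, expected=200):
    """Equivalent of the 'Condition' task: compare the code to the expected one."""
    return status == expected
```

A pipeline-like check then reads `condition_passes(response_code(build_request(url)))`, where `url` points at the deployed application.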

Finally, our application user will need to be notified that the pipeline has been triggered and completed.
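In the pipeline this is handled by the email notification and the SMTP endpoint configured earlier. For readers who want to picture the equivalent outside of Code Stream, here is a small standard-library sketch; the addresses, subject format, and SMTP host are hypothetical.

```python
import smtplib
from email.message import EmailMessage


def build_notification(sender, recipient, pipeline, status):
    """Create the notification email for a finished pipeline run."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = f"Pipeline {pipeline}: {status}"
    message.set_content(
        f"The pipeline '{pipeline}' finished with status {status}."
    )
    return message


def send_notification(message, smtp_host, smtp_port=25):
    """Deliver the message through an SMTP server (no authentication here)."""
    with smtplib.SMTP(smtp_host, smtp_port) as server:
        server.send_message(message)
```

The recipient would be the user who requested the pipeline trigger, as described in the Notify stage.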

To summarize, we have seen that, as part of the vRealize Suite, Code Stream (together with other vRA services) can be dynamically extended and applied to countless use cases, whether through its built-in integrations or by exploiting its extensibility features to make almost anything possible.

This blog was contributed by:

Yev Berman (Hybrid Cloud & Automation Team Leader), Tsachi Benassayag (Hybrid Cloud Solution Specialist) and Sagi Ilan (Hybrid Cloud & Automation Senior Consultant)