
8 May, 2024

July 17, 2023

Many of us have encountered multi-cloud projects where accessing resources across different cloud platforms becomes necessary. I recently worked on a project that required secure and efficient access to AWS services from GKE pods, and I want to share how I accomplished this integration.

Rather than taking the naive approach of attaching static AWS credentials to the pods, which are long-lived and can leak, I looked for an alternative. I'll walk you step by step through how I deployed external-dns on GKE and configured it to access AWS Route53 using OIDC federation.

Let’s start with the AWS side.

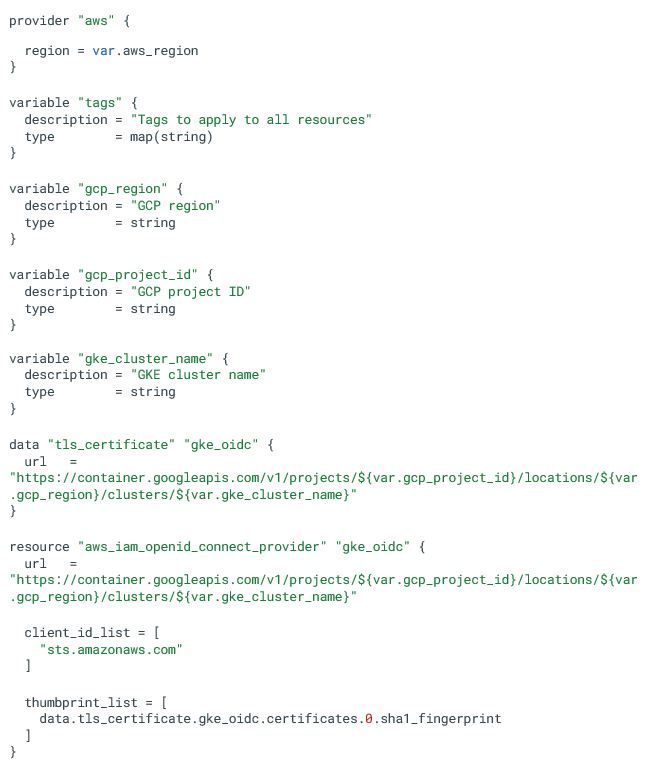

Using Terraform, I created an OIDC provider for GKE with the following configuration:

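This configuration registers the GKE cluster’s OIDC issuer as an identity provider in AWS IAM. The issuer URL is the cluster’s resource URL in the GKE API (https://container.googleapis.com/v1/projects/<PROJECT>/locations/<LOCATION>/clusters/<CLUSTER>). GKE signs the service account tokens it issues with this issuer and publishes the matching public keys under the same URL. When a pod attempts to assume an AWS role, it sends its service account token to AWS STS, and AWS verifies the token’s signature using the keys published by GKE. No secret is shared between the two clouds: AWS simply trusts tokens that the GKE cluster signed.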
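To make the naming concrete, here is a minimal Python sketch that derives the issuer URL from a cluster’s project, location, and name, along with the discovery URL AWS reads and the ARN IAM assigns to the provider. The helper names and the sample project, location, cluster, and account ID are hypothetical placeholders.

```python
# Hypothetical helpers; all sample values below are placeholders.


def gke_issuer_url(project: str, location: str, cluster: str) -> str:
    """Return the OIDC issuer URL GKE uses for the cluster's service account tokens."""
    return (
        f"https://container.googleapis.com/v1/projects/{project}"
        f"/locations/{location}/clusters/{cluster}"
    )


def oidc_discovery_url(issuer: str) -> str:
    """OIDC metadata document; it points to the public keys used to verify tokens."""
    return issuer.rstrip("/") + "/.well-known/openid-configuration"


def iam_oidc_provider_arn(account_id: str, issuer: str) -> str:
    """IAM names an OIDC provider after its URL with the https:// scheme removed."""
    host_and_path = issuer.removeprefix("https://")
    return f"arn:aws:iam::{account_id}:oidc-provider/{host_and_path}"


if __name__ == "__main__":
    issuer = gke_issuer_url("my-project", "europe-west1", "my-cluster")
    print(issuer)
    print(oidc_discovery_url(issuer))
    print(iam_oidc_provider_arn("123456789012", issuer))
```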

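Additionally, take note of the client ID (Identifier) of the AWS OIDC provider, which is set to “sts.amazonaws.com”.

The client ID is the audience a token must be issued for. AWS selects the OIDC provider by the token’s issuer (the “iss” claim) and rejects the token unless its audience (the “aud” claim) matches one of the provider’s client IDs. The EKS pod identity webhook, which we deploy on the GKE cluster later, requests service account tokens with exactly this audience by default.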
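The issuer and audience check can be illustrated with a short Python sketch that decodes a service account token and compares its claims with the provider’s settings. It deliberately skips signature verification, which AWS does perform, so it is only suitable for inspecting tokens; the function names are hypothetical.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying its signature (inspection only)."""
    payload_b64 = token.split(".")[1]
    # JWTs use unpadded base64url, so restore the padding before decoding.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def matches_provider(claims: dict, provider_url: str, client_ids: list[str]) -> bool:
    """Mimic the issuer/audience part of the AWS check (no signature check here)."""
    aud = claims.get("aud")
    # Kubernetes service account tokens carry "aud" as a list.
    audiences = aud if isinstance(aud, list) else [aud]
    return claims.get("iss") == provider_url and any(a in client_ids for a in audiences)
```

Running jwt_claims on the token file inside a pod is a quick way to confirm that “iss” matches the provider URL and “aud” contains “sts.amazonaws.com”.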

Together, these settings let pods in the GKE cluster present their service account tokens to AWS STS and receive temporary credentials for an IAM role.
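The exchange itself is a single STS AssumeRoleWithWebIdentity call. The AWS SDKs perform it automatically when AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE are set, so external-dns needs no code changes. The Python sketch below, assuming boto3 is installed when talking to real AWS, only makes the call visible; the function name and default session name are hypothetical.

```python
import os


def assume_role_from_env(sts_client=None, session_name: str = "gke-pod") -> dict:
    """Exchange the pod's projected token for temporary AWS credentials.

    Reads the same two environment variables the AWS SDKs use for
    web identity federation.
    """
    role_arn = os.environ["AWS_ROLE_ARN"]
    token_file = os.environ["AWS_WEB_IDENTITY_TOKEN_FILE"]
    with open(token_file, encoding="utf-8") as f:
        token = f.read().strip()

    if sts_client is None:
        import boto3  # only needed when calling the real AWS STS endpoint

        sts_client = boto3.client("sts")

    response = sts_client.assume_role_with_web_identity(
        RoleArn=role_arn,
        RoleSessionName=session_name,
        WebIdentityToken=token,
    )
    # Holds AccessKeyId, SecretAccessKey, SessionToken and Expiration.
    return response["Credentials"]
```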

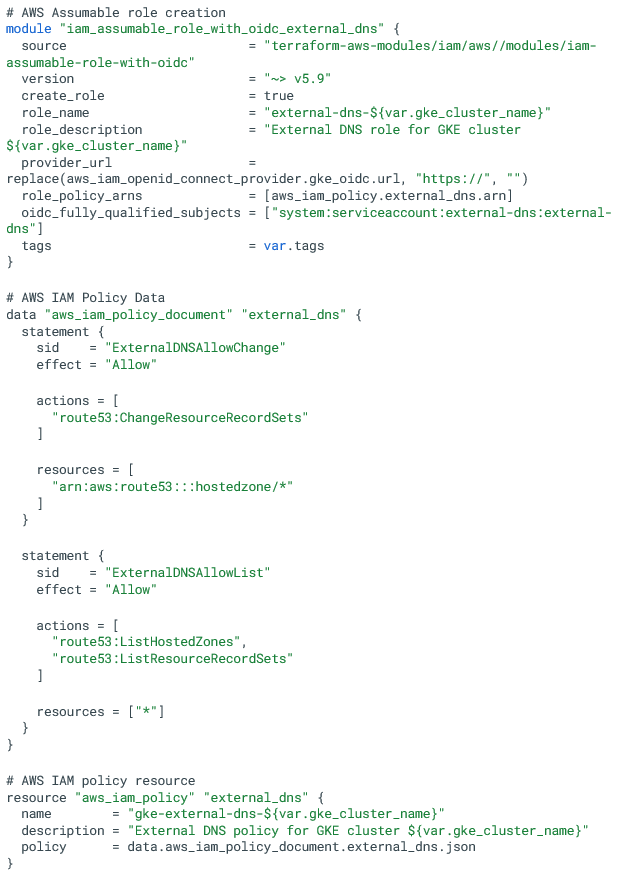

Next, I created an assumable AWS role and an IAM policy for external-dns using the terraform-aws-modules IAM modules:

Note the “oidc_fully_qualified_subjects” input: it restricts the role’s trust policy so that only the “external-dns” service account in the “external-dns” namespace (token subject “system:serviceaccount:external-dns:external-dns”) can assume the role.
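For reference, the trust policy for this role looks roughly like the one built by the Python sketch below. It is an approximation, not the module’s literal output: the explicit “aud” condition is an addition of this sketch, and the Route53 permissions follow the policy suggested in the external-dns AWS documentation, which may differ from the policy used in this project. Function and constant names are hypothetical.

```python
import json


def trust_policy(account_id: str, provider_url: str, namespace: str, service_account: str) -> dict:
    """Trust policy letting one Kubernetes service account assume the role via OIDC."""
    host_and_path = provider_url.removeprefix("https://")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{host_and_path}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # The "sub" claim encodes namespace and service account name.
                        f"{host_and_path}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        # Assumed extra check: the token must be issued for STS.
                        f"{host_and_path}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }


# Route53 permissions along the lines of the external-dns AWS documentation.
EXTERNAL_DNS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["route53:ChangeResourceRecordSets"],
            "Resource": ["arn:aws:route53:::hostedzone/*"],
        },
        {
            "Effect": "Allow",
            "Action": [
                "route53:ListHostedZones",
                "route53:ListResourceRecordSets",
                "route53:ListTagsForResource",
            ],
            "Resource": ["*"],
        },
    ],
}

if __name__ == "__main__":
    issuer = "https://container.googleapis.com/v1/projects/my-project/locations/europe-west1/clusters/my-cluster"
    print(json.dumps(trust_policy("123456789012", issuer, "external-dns", "external-dns"), indent=2))
```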

Moving on to the GCP side:

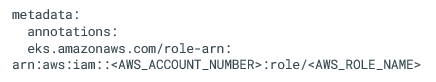

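For a pod to assume the AWS role, the AWS SDK inside it needs two environment variables, AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE, plus a projected service account token mounted at the path the second variable points to. On Amazon EKS, AWS provides a pod identity webhook that injects all of this automatically into any pod whose service account carries the “eks.amazonaws.com/role-arn” annotation.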
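Conceptually, the webhook’s mutation looks like the Python sketch below: if a pod’s service account carries the role annotation, each container gets the two environment variables and a mount for the projected token. This is a simplified, hypothetical model rather than the webhook’s code. The mount path and volume name are assumed to match the webhook’s defaults, and the real webhook also adds the projected token volume (audience “sts.amazonaws.com”) to the pod spec.

```python
# Simplified, hypothetical model of the pod identity webhook's mutation.
ROLE_ANNOTATION = "eks.amazonaws.com/role-arn"
TOKEN_DIR = "/var/run/secrets/eks.amazonaws.com/serviceaccount"  # assumed default


def inject_aws_identity(sa_annotations: dict, container: dict) -> dict:
    """Return a copy of `container` with AWS web identity env vars and mount added."""
    role_arn = sa_annotations.get(ROLE_ANNOTATION)
    if not role_arn:
        # Service account not annotated: the pod is left unchanged.
        return dict(container)
    mutated = dict(container)
    mutated["env"] = list(container.get("env", [])) + [
        {"name": "AWS_ROLE_ARN", "value": role_arn},
        {"name": "AWS_WEB_IDENTITY_TOKEN_FILE", "value": f"{TOKEN_DIR}/token"},
    ]
    # The volume itself (a projected serviceAccountToken) would be added to the pod spec.
    mutated["volumeMounts"] = list(container.get("volumeMounts", [])) + [
        {"name": "aws-iam-token", "mountPath": TOKEN_DIR, "readOnly": True},
    ]
    return mutated
```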

The webhook is a regular Kubernetes mutating admission webhook and does not depend on EKS, so the first step on the GKE cluster is to deploy it.

That can be achieved by applying the resources from the EKS pod identity webhook GitHub repository.

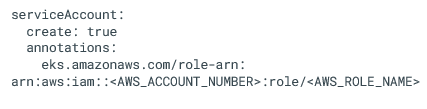

Finally, let’s deploy external-dns using Helm:

Create a ‘values.yaml’ file with the following content:

Make sure you replace <AWS_ACCOUNT_NUMBER> and <AWS_ROLE_NAME> with your AWS account ID and the name of the role created earlier.
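As an illustration of what the values file needs to contain, the Python sketch below renders a minimal set of values as JSON, which Helm accepts as YAML. It assumes the kubernetes-sigs external-dns chart, where recent versions take the provider as “provider.name” and older versions as a plain “provider” string. The region is a placeholder, the function name is hypothetical, and other settings such as domain filters are left out.

```python
import json

# Placeholders, as in the original values.yaml.
AWS_ACCOUNT_NUMBER = "<AWS_ACCOUNT_NUMBER>"
AWS_ROLE_NAME = "<AWS_ROLE_NAME>"


def external_dns_values(account: str, role_name: str, region: str) -> dict:
    """Assumed minimal values for the kubernetes-sigs external-dns chart."""
    return {
        "provider": {"name": "aws"},  # older chart versions: "provider": "aws"
        # The SDK needs a region even though Route53 itself is global.
        "env": [{"name": "AWS_DEFAULT_REGION", "value": region}],
        "serviceAccount": {
            "create": True,
            # Must match the service account allowed by the role's trust policy.
            "name": "external-dns",
            "annotations": {
                "eks.amazonaws.com/role-arn": f"arn:aws:iam::{account}:role/{role_name}",
            },
        },
    }


if __name__ == "__main__":
    # JSON is valid YAML, so this output can be saved directly as values.yaml.
    print(json.dumps(external_dns_values(AWS_ACCOUNT_NUMBER, AWS_ROLE_NAME, "us-east-1"), indent=2))
```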

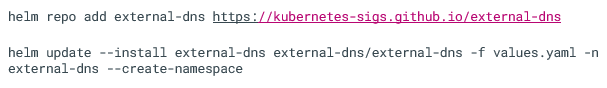

Deploy everything with Helm:
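The Helm step can be sketched as the commands below, wrapped in a small Python helper that prints them and runs them only when dry_run is False. It assumes the kubernetes-sigs chart repository and a release called “external-dns” in the “external-dns” namespace, which must match the namespace in the role’s trust policy; the helper names are hypothetical.

```python
import shlex
import subprocess

CHART_REPO = "https://kubernetes-sigs.github.io/external-dns/"  # assumed chart source


def helm_commands(values_file: str = "values.yaml", namespace: str = "external-dns") -> list[list[str]]:
    """Commands that add the chart repository and install or upgrade external-dns."""
    return [
        ["helm", "repo", "add", "external-dns", CHART_REPO],
        ["helm", "repo", "update"],
        [
            "helm", "upgrade", "--install", "external-dns", "external-dns/external-dns",
            # The namespace must match the subject in the role's trust policy.
            "--namespace", namespace, "--create-namespace",
            "--values", values_file,
        ],
    ]


def run(dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in helm_commands():
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(dry_run=True)
```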

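In conclusion, with this setup external-dns on GKE obtains short-lived AWS credentials through OIDC federation and manages Route53 records without any static AWS keys. The same pattern applies to any other GKE workload that needs access to AWS services.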

Written by: Yarden Weissman, Cloud Native Engineer

References:

https://github.com/TeraSky-OSS/GKE-to-AWS

EKS Pod Identity Webhook

External DNS

Cross Account AWS IAM Assumable roles